For the most part, people trust their eyes and ears. If you saw a video of a politician bad-mouthing a rival, or listened to a recording of a celebrity admitting to a crime, you would probably assume they were real, right? You might think you can see through Photoshop editing or tell when a recording is fake. But through technological innovation and groundbreaking artificial intelligence, the divisions between reality and fiction are fading.

AI-driven deep learning can now generate convincing fake video clips and audio tracks, known as deepfakes. Deepfake voices can sound exactly like a specific person, alive or dead. As long as the AI has a good reference, the results are very tough to tell apart from the real thing. Some software needs as few as 50 sentences to create a deepfake. Scanned images and audio tracks are enough to clone faces and voices.

This opens up many possibilities, good and bad. On the one hand, we can bring a loved one’s voice back to life. On the other, bad actors can destroy a person’s reputation. Deepfake technology is advancing quickly, and it is rife with moral dilemmas and conflicts. In this blog post, learn how some abuse deepfake software, the components of voice over ethics, and why WellSaid Labs has committed to only deploying AI ethically.

What are the ethics of deepfakes and voices?

One recent example of a simulated voice over was in the Anthony Bourdain documentary Roadrunner. The film used AI to make it sound like Bourdain read some of his correspondence aloud. This was not clear to audiences upfront, leading to some confusion and anger when the public found out. While the director has defended the decision by touting the consent of Bourdain’s family (and even that consent is disputed), this usage of a deep fake has remained contentious.

Shouldn’t people have control over the use of their voice? Doesn’t that control include not just a perceived “blessing,” but explicit consent? How will these deepfake voices affect a person’s legacy? Some defend the documentary, arguing that the words themselves were from the late chef and host. But we can’t know the tone or inflections Bourdain would have used, forcing filmmakers to decide these vital details.

Other situations seem potentially more dangerous or insidious. Deepfakes were used to make President Obama seemingly insult his rivals, showing how political points could be won or lost through fake clips and soundbites. A scandal could spin out of thin air, and investigating and exposing it would take up vital time during a race.

But the potential issues with deep-fake voices overflow into other fields as well. Fraudsters can scan and simulate a voice artist’s work en masse without their consent or payment. Voice over ethics are complicated—especially when an app or service may use one person’s voice for a product downloaded by millions of people.

On an individual level, deepfakes enable financial and criminal fraud such as impersonation, evidence falsification, and identity theft.

However, there are ways to reproduce and use voices ethically and effectively—and that’s what we’re here to illustrate.

What does ethical voice over work look like?

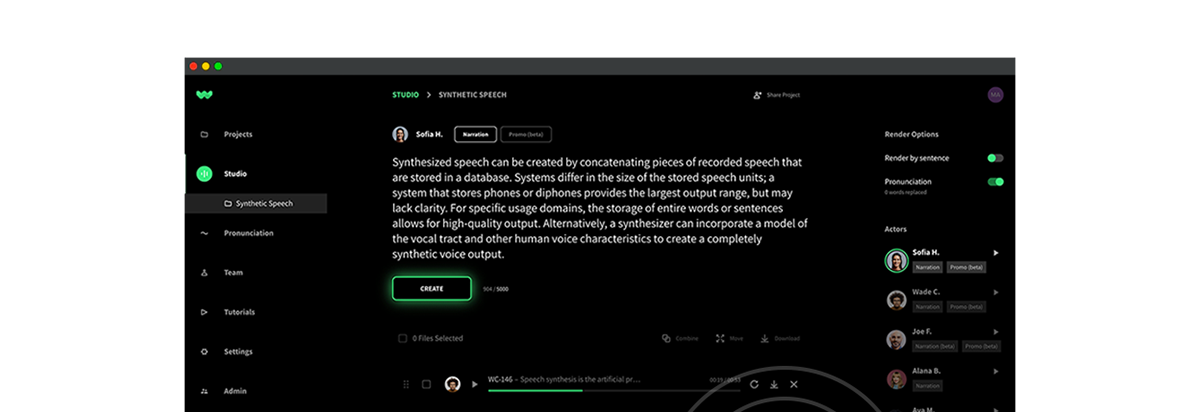

Voice cloning is present in many industries: movies, television, audiobooks, and video games. Online players can shift their voices, a busy actor’s lines can be voiced over for advertisements or movie promotions, and lengthy audiobooks can be created under a tight deadline. This software has the potential to help encrypt data or bring history to life. As with most powerful tools, what matters is how this technology is used.

From our perspective, this software can and must be used ethically. When a person has died or cannot give direct consent, strict guidelines should ensure that any vocal cloning is done the right way, or not done at all. Compensation and treatment of voice over artists and performers must be fair. This is why, here at WellSaid Labs, we only create AI likenesses of talent who have given written and explicit consent. That way, great work is accessible to millions, without potentially harming those who help produce it.

How can we support the evolution of ethical AI voice applications and discourage deepfakes?

Many people throw around the word “ethical” in artificial intelligence development circles. But what does that actually mean, particularly with AI voice? At WellSaid Labs, our ethical stance is that we “aspire to benefit all and harm no one.” It extends beyond the consent of voice actors. We prioritize protecting our voice actors’ vocal likenesses through content moderation that prohibits obscene, abusive, and fraudulent content. Additionally, voice actors have a right to anonymity and privacy. Finally, the equitable relationship between an AI voice provider and a voice actor must include fair compensation.

Outside of ethical business practices, investment by the larger tech community is critical.

Individual tech companies will hopefully treat actors fairly and equitably, but more support is needed. Governmental and tech initiatives are well underway. In 2021, 17 state legislatures introduced AI technology legislation. The federal government is also devoting resources to researching AI questions, for example through the National Institute of Standards and Technology, part of the U.S. Department of Commerce.

Large voluntary initiatives within the AI content community are also a promising source of support for the ethical application of this technology. The Content Authenticity Initiative was announced in 2019 as a partnership between Adobe, Twitter, and the New York Times to improve content authenticity. Since then, multinational organizations like Microsoft, the BBC, Intel, and others have joined the initiative. All these endeavors work together to strengthen the ethical use of AI voice technology.

Creating sustainable AI voices

There is so much room for creativity and growth with ethical AI-created voices and video. Applications for fast, inexpensive voice work are nearly endless.

Recording audiobooks or educational material? Absolutely.

Creating captivating learning and development materials? You betcha.

But we must make sure that those who put in the work are not taken advantage of and that the technology isn’t used to manipulate the public. AI voices sound best when they have the permission of the people behind them, creating a resounding, authentic, and celebrated voice for brands and consumers alike. There is no room for deepfakes.